By Beth Dougherty

In 2022 and 2023, you couldn't go a day without hearing about artificial intelligence (AI). Generative AI tools such as chatbots and art generators had become a central topic in everyday conversations.

But AI in cancer has deeper roots.

Twenty-five years before ChatGPT burst onto the scene, computers started playing a larger role in cancer diagnostics. In 1997, mammograms weren't even digital yet, but computers had already started analyzing them. The tools, called expert systems, used hard-coded logic to scan digitized images of film mammograms for signs of cancer.

On their own, the computers weren't any better at finding cancer than human radiologists. But if you put the two together, they did better as a team, says Michael Rosenthal, MD, PhD, assistant director of radiology in the Pancreas and Biliary Tumor Center at Dana-Farber Brigham Cancer Center.

Over time, radiologists got accustomed to using computer-aided tools for breast cancer diagnostics. When those tools evolved into AI-based tools years later, the clinical workflow — what data goes into the AI, what information comes out, and how clinicians use it — was already defined and familiar.

If AI is known for anything these days, it is disruption. But so far, AI-based cancer diagnostic tools haven't been very disruptive at all. They've fit rather seamlessly into clinical practice. They have also hinted at the potential for AI to benefit patients in other ways.

Soon, AI tools could help predict future cancers and encourage early screening, giving oncologists a better opportunity to detect cancer early, when treatments are often more effective and cures are more likely. The tools could also help identify biomarkers that guide personalized treatment decisions and make treatments more effective.

As with all things AI, the potential comes with risk, including risks of misdiagnosis, misuse, or bias. Dana-Farber researchers are working to reduce that risk and to safely and effectively advance AI for cancer diagnostics and prediction, to benefit cancer patients everywhere.

"I think oncology is one of the spaces where we can actually use AI for good," says Dana-Farber data scientist Bill Lotter, PhD. "As much as people are worried about AI more generally, it's exciting to think about these applications that we know could have clinical benefit."

AI Companions Aid Cancer Diagnostics

A small but growing number of AI-based medical technologies are already approved by the US Food and Drug Administration to help human radiologists assess cancer screening scans, such as mammograms, CT scans, and MRIs, for signs of breast, lung, or liver cancer.

"AI has made a lot of progress in augmenting workflows that already exist," says Lotter. "It's not reinventing the wheel…yet."

Lotter shepherded one of the first AI-based breast cancer diagnostic products through regulatory approval. The task was less challenging than it could have been because the regulatory pathway for earlier, non-AI computational tools had already been established.

"We relied on the gold standard for FDA clearance for these types of products," says Lotter. "This approach has been around since the days of old-school computer-aided decision tools."

Lotter used what is called a "reader study" to evaluate his AI tools for breast cancer diagnostics. In a reader study, radiologists read mammography images from a retrospective dataset. They read each image once on their own and once with the AI's assistance. If the radiologists' diagnoses are more accurate with the AI than without it, the AI adds a benefit.
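To make the reader-study logic concrete, here is a minimal sketch, assuming each case records a ground-truth label plus one radiologist's call made alone and one made with the AI. It compares sensitivity (cancers caught) and specificity (healthy cases correctly cleared) between the two conditions. The `Read` structure and function names are hypothetical, and this is not Lotter's actual protocol: regulatory reader studies typically involve many readers and cases and use more sophisticated statistics, such as comparing ROC curves.

```python
import math
from dataclasses import dataclass


@dataclass
class Read:
    """One radiologist's calls on one case (hypothetical structure)."""
    has_cancer: bool    # ground truth, e.g. from biopsy or follow-up
    unaided_call: bool  # radiologist flagged the case when reading alone
    aided_call: bool    # same radiologist flagged it when reading with the AI


def sensitivity_specificity(truth, calls):
    """Return (sensitivity, specificity); NaN when a class has no cases."""
    pairs = list(zip(truth, calls))
    tp = sum(1 for t, c in pairs if t and c)
    fn = sum(1 for t, c in pairs if t and not c)
    tn = sum(1 for t, c in pairs if not t and not c)
    fp = sum(1 for t, c in pairs if not t and c)
    sens = tp / (tp + fn) if tp + fn else math.nan
    spec = tn / (tn + fp) if tn + fp else math.nan
    return sens, spec


def compare_reads(reads):
    """Score the same cases read without and with AI assistance."""
    truth = [r.has_cancer for r in reads]
    return {
        "unaided": sensitivity_specificity(truth, [r.unaided_call for r in reads]),
        "aided": sensitivity_specificity(truth, [r.aided_call for r in reads]),
    }
```

With four made-up reads, `[Read(True, False, True), Read(True, True, True), Read(False, False, False), Read(False, True, False)]`, `compare_reads` returns `(0.5, 0.5)` for unaided reading and `(1.0, 1.0)` for aided reading. In that toy case, the AI helps on both measures.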

"The breast imaging AI has fit very neatly into a place where we already have regulatory experience, we have clinical experience, we've got the infrastructure," says Rosenthal. "It's tidy and well done."

But when AI systems bring in new kinds of data and workflows, and have no regulatory history, the bar for entry into clinical practice necessarily rises.

Brian Wolpin, MD, MPH (left), and Michael Rosenthal, MD, PhD, review CT scans that were analyzed by AI.

AI's Potential to Screen for Cancer Risk

Rosenthal is working with lead investigator Brian Wolpin, MD, MPH, at Dana-Farber's Hale Family Center for Pancreatic Cancer Research, to use AI to identify individuals at high risk of developing pancreatic cancer. Screening for pancreatic cancer can be costly and invasive, but high-risk individuals could benefit from screening if it helps identify the cancer at an early stage when treatment could be more effective.

Wolpin and Rosenthal want to create an AI algorithm that could find risk signals in a patient's medical record and use them to assess whether the patient's risk of pancreatic cancer is high enough to warrant screening. "Without AI, those risk signals might go undetected, but together they could stack up to an increased cancer risk," says Rosenthal.
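How can several weak signals "stack up"? The sketch below offers one illustration using a simple logistic model, a common way to turn several inputs into a single probability. The article does not describe the team's actual model, so this is not it. Every signal name, weight, intercept, and screening cutoff below is hypothetical and chosen only for illustration. A real model would learn its weights from training data.

```python
import math

# Hypothetical signals and weights, for illustration only.
WEIGHTS = {
    "muscle_loss_on_ct": 0.9,
    "abnormal_bloodwork": 0.6,
    "relevant_diagnosis_code": 0.7,
}
BASELINE = -4.0             # hypothetical intercept: low risk with no signals
SCREENING_THRESHOLD = 0.05  # hypothetical cutoff for recommending screening


def risk_probability(signals):
    """Sum the weights of the signals present, then map to a 0-1 probability."""
    score = BASELINE + sum(WEIGHTS[name] for name, present in signals.items() if present)
    return 1.0 / (1.0 + math.exp(-score))


def recommend_screening(signals):
    """True when the combined risk reaches the (hypothetical) screening cutoff."""
    return risk_probability(signals) >= SCREENING_THRESHOLD
```

With these made-up numbers, muscle loss alone gives a probability of about 4 percent, below the cutoff. All three signals together give about 14 percent, above it. No single signal is decisive, but the combination can be.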

The research is in progress and has so far included the development of an AI model that reads CT scans to assess loss of muscle mass. That tool has enabled the team to determine that muscle mass loss often precedes a pancreatic cancer diagnosis.

Not everyone who loses muscle mass develops pancreatic cancer. For instance, a person recently admitted to the ICU might have decreased muscle mass, but that would likely be due to their ICU stay rather than an increased cancer risk. Wolpin and Rosenthal are now working to refine the AI algorithm so it can distinguish among the various causes of muscle wasting.

They are training their AI models on more than a million patient records that include medical codes, bloodwork, and more than half a million CT scans. It is not yet clear exactly what data will need to be integrated into the AI model to make it a reliable predictor.

"The overall framework is set, but there's a lot still to be determined," says Rosenthal.

Left to right: Pathology slide, nuclear grade heatmap, and immune infiltration heatmap

AI models process images of pathology slides and highlight areas of interest related to cancer and the immune system’s effort to defeat it.

The Explainability Challenge

This kind of iterative training and validation cycle is an essential part of assessing the value of the AI, says Lotter. "It may be the most important challenge in AI these days: How do we actually gather the confidence that our models work?"

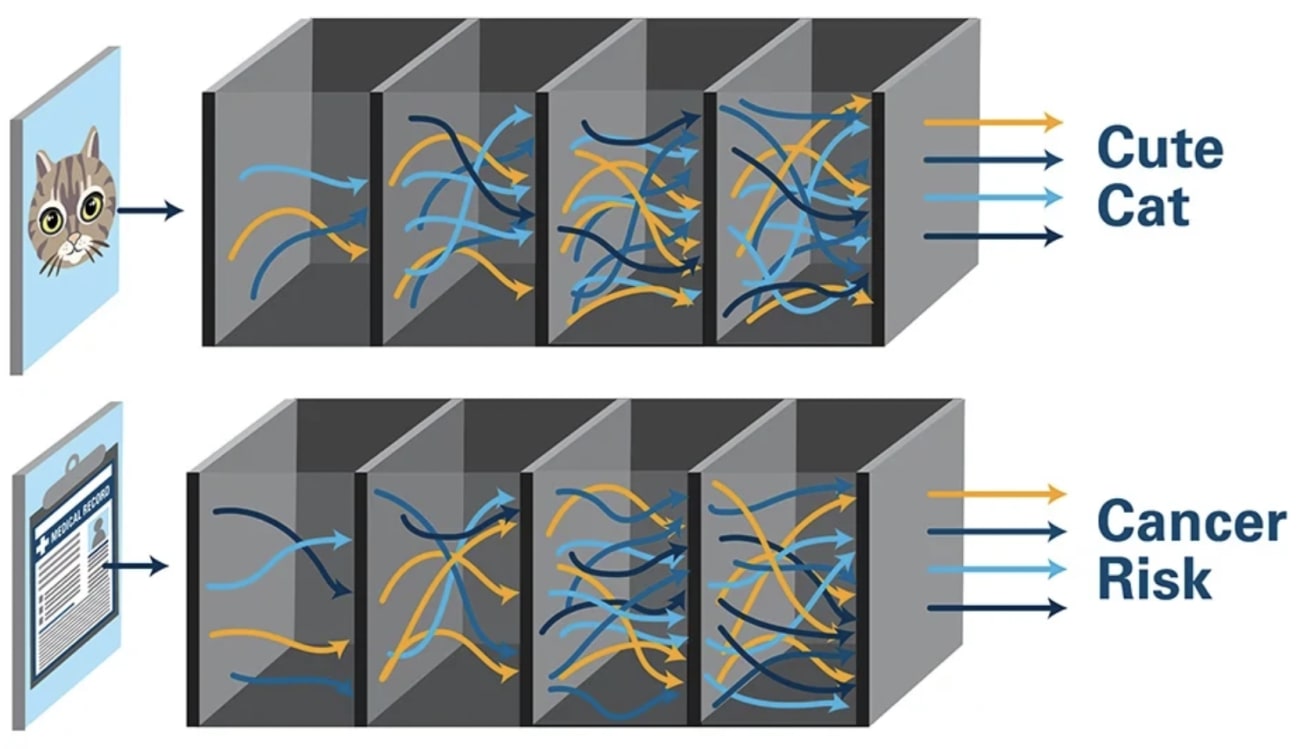

Model validation becomes more difficult as AI algorithms become less explainable. AI experts call such models "black boxes" because the logic inside them is hidden from view. Lotter's AI for reading mammograms is relatively transparent: a radiologist can see its assessment marked on the mammogram. But Rosenthal might not know exactly what combination of data his AI used to assess a patient's risk.

"Explainability is a sanity check of the algorithm," says Lotter, who is investigating ways to make AI more interpretable or explainable, especially when algorithms fail or appear biased. "It gets more challenging with AI that is used in tasks that humans aren't already doing, that haven't been part of the standard clinical workflow."

Rosenthal's AI for predicting pancreatic cancer risk, for example, is putting data together in an unprecedented way to make predictions that oncologists don't currently make. Because it hasn't been done before, there isn't a clear vision for how such decisions could be made, how accurate they need to be, when such tools might be used, and by whom.

This uncertainty isn't a showstopper, says Rosenthal. The same unknowns often exist for new cancer drugs, which may start out being used for late-stage cancers and then, as oncologists learn more, end up being used earlier or in combinations.

It typically takes years to do the research needed to guide adoption of new medicines. AI development in oncology might move faster than drug development, but likely not as fast as AI in the consumer realm.

"I think AI will be disruptive to the medical system in some ways," says Rosenthal, who notes that AI is being developed for health care not only at places like Dana-Farber, but also at tech companies. "But ultimately, it's going to be reined in by traditional medical systems. The gatekeepers of medicine are not going to allow AI to take over patient care without high quality evidence."

Can AI Guide Cancer Treatment?

Dana-Farber researchers are also investigating ways that AI models can predict response to treatment to potentially guide treatment decisions.

One investigation, led by Alexander Gusev, PhD, uses genomic data to predict the primary site of a patient's cancer. Another, led by Eliezer Van Allen, MD, chief of Population Sciences, analyzes pathology slides of kidney cancer tumor samples. That tool identifies previously underappreciated features, such as tumor microheterogeneity, that could help predict whether a tumor will respond to immunotherapy.

While these AI models have the potential to guide treatment, they are currently being used only for research purposes. They help generate hypotheses that can be tested and explored safely, without introducing AI into clinical practice.

Think of them as being at the same stage as the discovery of an oncogene in a basic science lab. Such a discovery opens the door to potential new treatment decisions, but a lot of research, development, and clinical testing must happen before it leads to a medical intervention.

"The discovery science part of AI research is exciting because it gives us a chance to examine high-dimensional data in new ways, which could lead to unprecedented new discoveries," says Van Allen.

A key next step is to make AI models more robust and to train them on more diverse data.

"We need very large datasets, and they need to be representative of all the patients an AI model would encounter in the real world," says Van Allen.

Such algorithms would need to be tested not in retrospective studies, like Lotter's reader study, but in prospective clinical trials that follow patients as they undergo cancer treatment. The designers of those trials will need to determine where the AI intervention fits into practice, what the current standard of care is in that workflow, and how to measure the AI's safety and efficacy against that standard.

Envisioning an AI-Enabled Future

Dana-Farber excels at driving this kind of research. It is the kind of place where clinicians and scientists are constantly thinking through clinical workflows and applying evidence from studies to improve them.

"No one is more expert in cancer care," says Rosenthal. "We understand the intimacy of these life-altering decisions and the trust we need to build with patients."

AI technology is analyzing CT scans like those pictured here to detect loss of muscle mass, which may be a warning sign of pancreatic cancer.

Lotter, Rosenthal, and Van Allen are all cautiously optimistic that AI-based tools in the diagnostic space will bring benefits to patients. Lotter is also excited about Dana-Farber's proposed new inpatient cancer hospital and its potential to provide a first look at what an AI-enabled cancer hospital might look like.

"One of the big challenges of AI in health care is trying to retrofit AI into existing clinical systems," says Lotter. "With this new cancer hospital initiative, we could have an opportunity to build these systems from the ground up and really think ahead about the IT infrastructure we will need."

An AI model is trained on one large dataset and then validated on a separate large dataset with the same structure. In practice, the AI applies what it has learned to never-before-seen data to make a prediction. That decision process happens inside what is sometimes called a "black box" because the logic is hidden and consists of many layers of smaller decisions.
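The train, validate, and predict cycle can be illustrated with a deliberately tiny "model": a single cutoff on one numeric score, learned from training data. The names and the one-feature setup are hypothetical simplifications. The models discussed in this article are far more complex, with many layers, which is exactly what makes them hard to inspect. By contrast, this model's entire logic is one visible number.

```python
def accuracy(cutoff, data):
    """Fraction of (score, label) pairs where `score >= cutoff` matches the label."""
    return sum((score >= cutoff) == label for score, label in data) / len(data)


def train_threshold(train):
    """Learn the cutoff that maximizes accuracy on the training set.

    Candidates are the observed training scores; on ties, `max` keeps the
    first (lowest) cutoff because candidates are sorted ascending.
    """
    candidates = sorted({score for score, _ in train})
    return max(candidates, key=lambda c: accuracy(c, train))


def predict(cutoff, score):
    """Apply the learned cutoff to a new, never-before-seen case."""
    return score >= cutoff
```

A typical use is to call `train_threshold` on the training set, check `accuracy` on a separate validation set the model never saw during training, and only then call `predict` on new cases. Validating on held-out data is what builds confidence that the model works beyond the examples it learned from.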